In the middle of the AI revolution, there are businesses and developers that remain on the sidelines, concerned about the privacy risks. Industries such as healthcare and finance are in a race to harness the power of AI without compromising data security. To address this challenge, they are turning to locally deployed language models.

If you’re considering testing the idea of running a local LLM, starting on your personal computer is a great first step. This guide walks you through the process of running an LLM on an average-performance laptop.

Local LLMs: Better Security and Customization

Running LLMs locally provides advantages that overcome some of the limitations of cloud-based services. One of the most important benefits is increased privacy. This is critical for industries that handle sensitive information, such as healthcare, finance, and legal services, where data privacy is essential.

In addition to privacy, local deployment of LLMs provides greater control over data and model customization. Users can tailor models to specific needs and preferences, fine-tuning them with proprietary data. This level of customisation is usually not feasible with cloud-based models, which are typically designed for broader, more generalised use cases. Local LLMs help businesses tailor solutions to their operational and strategic goals.

Local LLMs also allow developers to verify their ideas quickly and spend less money on proofs of concept. They can deploy LLMs and then use them through local APIs, which are usually compatible with the OpenAI format. This makes it easy to move the solution to commercial models like GPT in the future, with minimal changes to the code.

How to Choose an Open-Source LLM

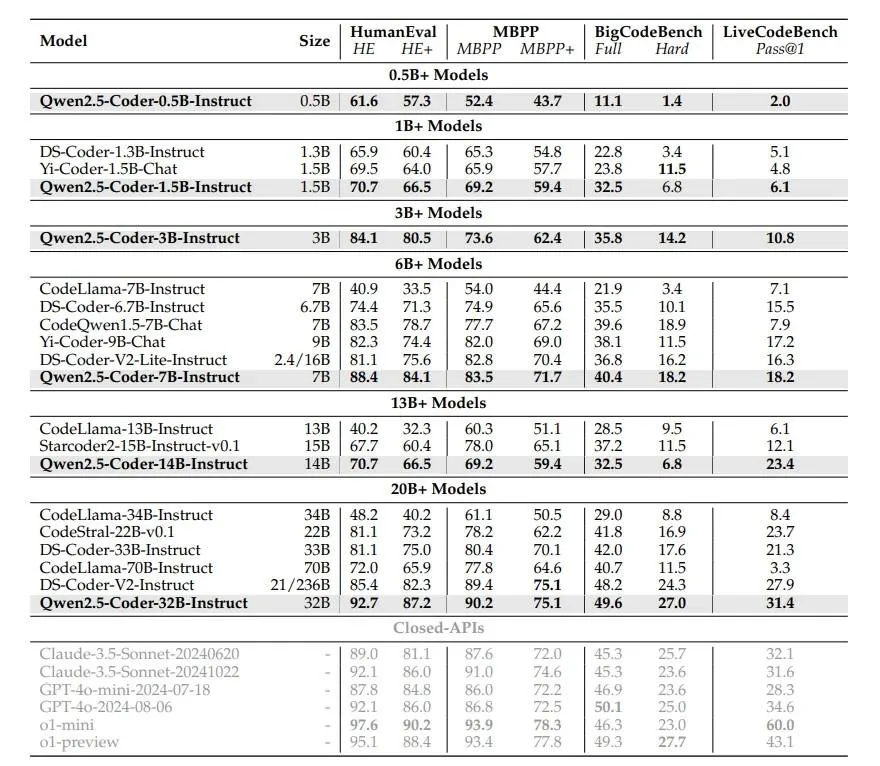

When choosing an open-source LLM for your use case, you normally start by looking at LLM benchmarks. Several benchmarks compare LLM performance across various datasets and tasks, but I recommend the Hugging Face LLM Leaderboard. Before you choose the best LLM for your use case, take a closer look at the datasets it was tested on.

After you select your preferred LLM, you can use different tools to run it locally. In this article, we will highlight two tools: Ollama and LM Studio.

Hardware Requirements

Is your laptop good enough for running an LLM? The answer depends both on the computing power of your laptop and the size of the LLM you want to use locally.

Case Study: Dell i7 vs M1 Pro

In my case, I tested this process on two laptops: a Dell Latitude 5511, which I use for work, and a MacBook Pro (M1 Pro), which I keep for personal use. The Dell Latitude 5511, with its Intel i7-10850H CPU and 16GB RAM, performs well for lightweight, CPU-bound tasks but lacks a dedicated GPU for accelerated processing. The MacBook Pro, also with 16GB of unified memory, leverages its integrated Neural Engine and GPU to handle moderate-sized models like LLaMA-2-7B efficiently.

The Verdict

For the models I tested, the Dell laptop took up to 3 minutes to generate a response, while the Mac did it in seconds. While the Dell Latitude suits more straightforward tasks, the M1 Pro excels in efficiency and performance, making it a better choice for sustained workloads.

Keep in mind your hardware limitations when choosing the best model for local deployment.

At this stage, it’s also important to know that there are techniques that allow your hardware to run bigger models with less computing power. I’ll discuss them later in this article.

Optimal Model Parameters

Usually, models are available in versions with different numbers of parameters (1B, 7B, etc.). The number of parameters influences both output quality and hardware requirements, so let’s take a look at what to expect from each version.

1. 1B Models

- Size: ~1 billion parameters.

- Performance: Lightweight and suitable for general-purpose tasks like text generation, basic reasoning, and smaller datasets.

- Hardware requirements: Minimal; can run efficiently on systems with 16GB RAM, such as the Dell Latitude 5511, using CPU-based inference tools like llama.cpp.

- Use case: Ideal for resource-limited environments or applications requiring quick responses with less computational cost.

2. 7B Models

- Size: ~7 billion parameters.

- Performance: Significantly better at understanding context, reasoning, and handling complex tasks.

- Hardware requirements: Moderate; benefits from optimised hardware like the Mac M1 Pro’s unified memory or Neural Engine.

- Use case: Suitable for applications needing better accuracy and contextual awareness without requiring the computational power of larger models.

3. 32B Models

- Size: ~32 billion parameters.

- Performance: Highly advanced, offering state-of-the-art capabilities in reasoning, detailed text generation, and multi-modal tasks (if supported).

- Hardware requirements: High; demands powerful GPUs or systems with 32GB+ RAM and optimised frameworks like ONNX, TensorRT, or CoreML for inference.

- Use case: Best for high-precision, enterprise-level applications, research projects, or when handling large, complex datasets.
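
As a rough sanity check on the hardware requirements listed above, the memory needed just to hold a model's weights can be approximated as the parameter count multiplied by the bytes used per weight. The sketch below assumes that simple formula and ignores runtime overhead such as the context cache and activations, so real usage will be higher. The function name is hypothetical and chosen only for illustration.

```python
def estimate_weight_memory_gb(params_billion: float, bits_per_weight: int = 16) -> float:
    """Approximate memory for the weights alone, in GB (10^9 bytes)."""
    bytes_per_weight = bits_per_weight / 8
    # billions of parameters * bytes per parameter = gigabytes
    return params_billion * bytes_per_weight


# Compare the three model sizes discussed above at 16-bit and 8-bit precision.
for size in (1, 7, 32):
    at_16_bit = estimate_weight_memory_gb(size, 16)
    at_8_bit = estimate_weight_memory_gb(size, 8)
    print(f"{size}B model: ~{at_16_bit:.0f} GB at 16-bit, ~{at_8_bit:.0f} GB at 8-bit")
```

Under these assumptions, the weights of a 7B model at 16-bit precision alone take about 14 GB, which is why a 16GB laptop sits at the edge of what is practical for that size without further optimisation.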

Comparing Param Sizes

You can also compare the accuracy of the same model at different parameter sizes, as performance might differ.

For example:

According to this data, Qwen2.5-Coder with 32 billion parameters performs much better than its 7B and 1B versions.

Make the Final Choice

- Select 1B models for lightweight tasks or when hardware resources are limited.

- Opt for 7B models when balancing performance and hardware requirements, such as for detailed reasoning or tasks of medium complexity.

- Use 32B models only if you have the computational capacity and need cutting-edge performance for demanding workloads like AI research, large-scale NLP tasks, or enterprise-grade solutions.

For my local LLM experiments, I chose 7B models to run on both laptops.

Quantisation Techniques for Optimisation

If your hardware is limited, you may want to consider running a quantised model.

Quantisation techniques can be used to optimize LLMs for local deployment, primarily by reducing model size and enhancing performance without significantly compromising accuracy.

Why You Need Quantisation

As LLMs grow in complexity and size, the computational and memory demands for running them locally can become prohibitive. Quantisation addresses these challenges by transforming the model’s parameters from high-precision floating-point numbers to lower-precision formats, thereby decreasing the model’s footprint and computational requirements.

How Quantisation Works

Quantisation compresses models to make them more manageable for local environments with limited resources. By converting 32-bit floating-point weights to 16-bit floats, or to 8-bit or even smaller integers, quantisation significantly reduces memory consumption and can speed up inference.
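
To make the idea concrete, here is a minimal sketch of one common scheme, symmetric 8-bit quantisation with a single scale per tensor. It is an illustration only: the quantised models you download typically use more elaborate block-wise formats, and the function names and sample weights below are made up for the example.

```python
def quantize_int8(weights):
    """Symmetric per-tensor quantisation: map floats to integers in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    # One scale for the whole tensor; fall back to 1.0 for an all-zero tensor.
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float weights from the stored integers."""
    return [q * scale for q in quantized]


# Illustrative weights, not taken from a real model.
weights = [0.42, -1.27, 0.003, 0.9, -0.65]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
max_error = max(abs(a - b) for a, b in zip(weights, restored))

print("quantised values:", q)
print(f"scale: {scale:.4f}, largest round-trip error: {max_error:.4f}")
```

Each weight is now stored in one byte instead of four, at the cost of a rounding error of at most half the scale per weight. That rounding error is the source of the accuracy loss that comes with quantisation.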

In my own local LLM experiments, quantisation allowed me to run a 32B model (Qwen2.5-Coder) on my laptop.

Quantisation Trade-Offs

Despite the benefits, quantisation has its challenges. One of the primary trade-offs is the potential loss of model accuracy, especially in tasks that require high precision. The degree of accuracy loss depends on the model architecture and the specific quantisation method used. As the demand for running LLMs locally continues to grow, mastering quantisation will be a key skill for developers seeking to harness the full potential of these powerful models in diverse and resource-limited environments.

Where to Find Quantised Models

You can find quantised versions of many models on Hugging Face. For example, a quantised version of Qwen/Qwen2.5-Coder-1.5B is available there.

Quantised versions are also available in Ollama and LM Studio (they use Hugging Face as a model provider).

Running Models with Ollama

Ollama is an open-source tool designed specifically for this task: to run and manage large language models (LLMs) locally on your machine. You can download Ollama from its official website: https://ollama.com/.

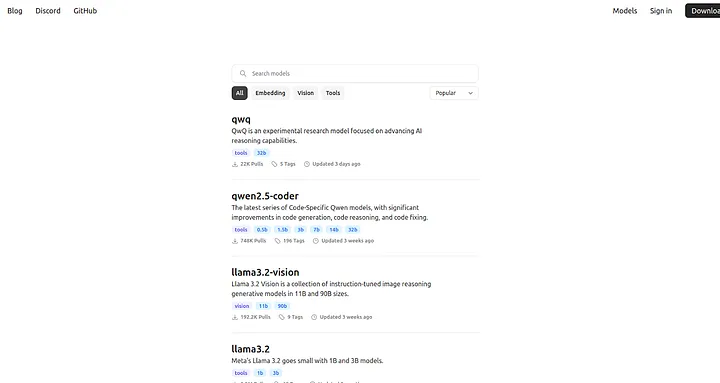

Choosing the Ollama Model

Ollama can run many models. To find the one that is best suited for your use case, take a look at their model repository. There you will find versions of the same model with different parameter counts, as well as quantised versions of the models.

Options for Running a Model

Ollama’s documentation is quite extensive, but I want to highlight two options that work for me:

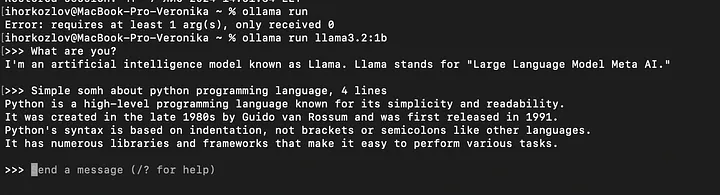

1. You can chat locally

```bash
ollama run llama3.2:1b
```

In this case, I chatted with the 1B-parameter version of llama3.2. You specify the model and its size on the command line, separated by a colon.

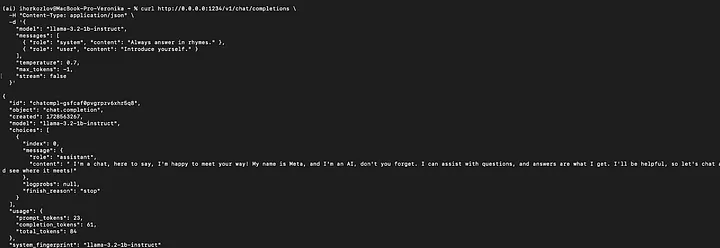

2. You can run a local server with OpenAI-like endpoints

```bash
ollama serve
```

You can then use it directly with OpenAI clients in your applications.
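
As an illustration, the sketch below talks to the local server using only the Python standard library. It assumes Ollama is listening on its default address (http://localhost:11434) and exposes the OpenAI-style chat completions route under /v1, and that the llama3.2:1b model has already been pulled. The helper names are hypothetical.

```python
import json
import urllib.request

# Assumption: default local Ollama address with its OpenAI-compatible prefix.
OLLAMA_BASE_URL = "http://localhost:11434/v1"


def build_chat_request(prompt, model="llama3.2:1b", base_url=OLLAMA_BASE_URL):
    """Build (but do not send) an OpenAI-style chat completion request."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(prompt, **kwargs):
    """Send the request to the running server and return the reply text."""
    with urllib.request.urlopen(build_chat_request(prompt, **kwargs)) as response:
        body = json.load(response)
    # OpenAI-format responses put the text under choices[0].message.content.
    return body["choices"][0]["message"]["content"]
```

With the server running, calling ask("Explain quantisation in one sentence.") should return the model's answer as a string. Because the request follows the OpenAI format, the official OpenAI client libraries can usually be pointed at the same base URL instead of hand-building requests.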

Don’t forget to unload the model and free your resources when you are done:

```bash
ollama stop <model>
```

To sum up, Ollama is a great choice to run a locally deployed LLM. It simplifies the installation, configuration, and management of LLMs.

Running Models with LM Studio

LM Studio is an IDE for LLM setup, configuration, and even RAG (Retrieval Augmented Generation). As a developer, I prefer this to Ollama.

You can download LM Studio at https://lmstudio.ai/. Be careful! This is not yet a production release, but even at this stage, it’s a great tool that simplifies local development.

As with Ollama, you can run a local server with your preferred LLM or make it accessible to your teammates on the local network. The LM Studio UI displays information about current resource usage and allows you to customize your LLM in depth.

Local server setup:

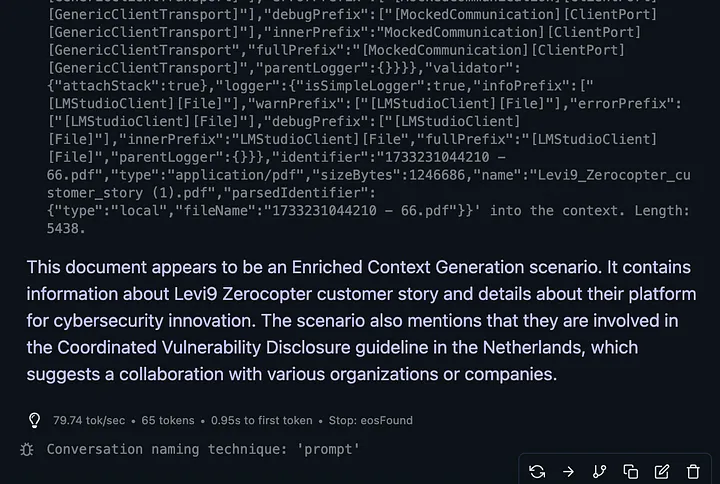
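
Since the local server speaks the OpenAI format, moving between tools, or later to a commercial API, is mostly a matter of changing the base URL and the model name. The sketch below shows one hypothetical way to keep that in a single place. The local ports are the tools' usual defaults and are assumptions here, so check the address shown in your own server settings; the model names marked as placeholders must be replaced with real ones.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Backend:
    base_url: str
    model: str


# Assumptions: default local ports; placeholder model names must be replaced.
BACKENDS = {
    "ollama": Backend("http://localhost:11434/v1", "llama3.2:1b"),
    "lmstudio": Backend("http://localhost:1234/v1", "your-loaded-model"),
    "openai": Backend("https://api.openai.com/v1", "your-openai-model"),
}


def chat_endpoint(name: str) -> str:
    """Return the chat completions URL for the chosen backend."""
    return f"{BACKENDS[name].base_url}/chat/completions"


for name in BACKENDS:
    print(f"{name}: {chat_endpoint(name)}")
```

The rest of the application code can stay the same regardless of which entry is selected, which is what keeps a later migration cheap.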

Chat with Documents

LM Studio allows users to upload their documents and ask the LLM questions about them. The chat interface currently supports PDF, DOCX, TXT, and CSV formats. You can adjust the prompt directly in the chat interface and see how the LLM reacts to it.

If enabled, the tool provides extensive logging directly in the chat interface. Also, depending on the document size, LM Studio either puts the document directly in the context (perfect for long-context models) or uses a RAG process.
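
The choice between full context and retrieval can be pictured with the toy sketch below. It is not LM Studio's actual logic, which is not described here: the word budget, chunk size, and word-overlap scoring are all assumptions for illustration, and a real RAG pipeline would normally rank chunks with embeddings rather than shared words.

```python
def split_into_chunks(text, chunk_words=50):
    """Split a document into consecutive chunks of roughly chunk_words words."""
    words = text.split()
    return [" ".join(words[i:i + chunk_words]) for i in range(0, len(words), chunk_words)]


def select_context(document, question, context_budget_words=200, top_k=2):
    """Return the text to place in the prompt for this question."""
    if len(document.split()) <= context_budget_words:
        return document  # small document: include it whole

    # Large document: keep only the chunks sharing the most words with the question.
    question_words = set(question.lower().split())
    chunks = split_into_chunks(document)
    ranked = sorted(
        chunks,
        key=lambda chunk: len(question_words & set(chunk.lower().split())),
        reverse=True,
    )
    return "\n".join(ranked[:top_k])
```

A short document is passed through unchanged, while a long one is reduced to the few chunks most related to the question, which keeps the prompt within the model's context window.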

Overall, LM Studio simplifies local LLM deployment. Its OpenAI-compatible API, server deployment capabilities, and user-friendly interface make it a popular choice among developers.

Your Turn: Try LLM Local Deployment

Local deployment offers more than just technical advantages. It offers increased privacy and the ability to operate offline, which are critical in industries handling sensitive information. Furthermore, the ability to customize models for specific applications enables the creation of tailored solutions that are aligned with organizational goals and user needs.

I hope you find the strategies and best practices in this article useful, and that you can now test local LLM deployments with greater confidence.