It was 3 AM and George Lambru, test developer at Levi9, was still hunched over his computer, running tests in Postman and SOAP UI for a Dutch risk and insurance analyst. The problem was massive: a major client had tried to upload half a million records, only for the system to fail over and over. It was late in the night when George managed to produce an Excel report of the app’s test results. That report and that crisis were a wake-up call for the client: waiting until a crisis to test your system’s limits isn’t just a bad strategy; it’s an unsustainable one. They had to find a better way.

When scaling errors out

Our client, a Dutch company specializing in fraud investigation for the insurance industry, had grown significantly over its 20-year history. Fraud investigation involves two main stages: screening (or verification), which means searching for personal or business data, and identifying potential issues based on certain indicators. The company helps determine whether a case should be dismissed: the investigation builds on the information obtained from the verifications, and the investigator decides whether to continue it.

What started as a monolithic application built with legacy technologies and serving a small client base evolved into a microservice architecture that serves over a hundred companies. “The monolith was prepared for certain request volumes, but when new clients brought larger datasets, problems started appearing,” says Victor Munteanu, Levi9 Test Architect.

Legacy testing tool: JMeter

At the time, the performance testing solution was using JMeter, and the approach was entirely reactive – driven by client complaints rather than proactive prevention. “We chose JMeter because it was readily available and we had some experience with it from other projects,” Victor recalls. “Its Chrome driver integration was one of its highlights, because we could test both UI and backend components through multithreading, which was exactly what we needed at the time.”

However, JMeter has serious limitations. As Victor explains, “JMeter is not a framework but a desktop application where you configure components to run various tests and requests.” The majority of companies use JMeter for certain test scenarios, such as performance tests. If a company wants to conduct a performance test, they must create a standalone JMeter project with a specific target in mind, which means that for any new requirements, refactoring that project may take a long time, and this is not always justified.

It also has a steep learning curve. “It’s extremely difficult to teach someone who wasn’t born in the ’90s how to use it,” Victor notes with a hint of humor. “There are certain quirks you just have to learn, and the documentation is extraordinarily dense.”

The limitations of the testing process were clearly visible the night of the crash. It started with an engineering manager who requested an estimate for processing 2,000 files after a client attempted to upload 500,000 files and failed. George Lambru, who at the time was working on a different aspect for the Dutch client, was brought in to help with the testing and spent the night retracing the performance issues in the application.

K6, an open-source alternative

After the incident prompted a wake-up call from the client, George was tasked with investigating how to set up reliable testing. He discovered K6, an open-source performance testing tool that offered a cloud option. Written in Go but using JavaScript for test scripts, K6 could also be easily used by developers to write their own tests when they created a new feature. “The fact that it used JavaScript was one of our key deciding factors,” Victor underlines. “We had already standardized on JavaScript across our testing stack, using Cypress and Postman, so K6 fit perfectly into our ecosystem.”

Performance testing on a budget

Initially, the team proposed using K6’s cloud services for testing and monitoring. However, this approach coincided with an aggressive cost-cutting campaign by the client. So George decided to look into alternatives using existing resources, eventually developing a zero-cost solution that used Azure DevOps agents, InfluxDB, and Grafana for visualization.

“We knew that we didn’t need to run tests in the cloud or on virtual machines,” Victor adds. “As long as the application is hosted properly, you can run tests from anywhere with sufficient bandwidth without affecting the results.” Performance testing can also be done on a budget.

How to run tests with K6

“A basic test can be as simple as an HTTP request with a method and endpoint definition, followed by a sleep command,” George explains. “From these basic building blocks, you can construct comprehensive test suites that include smoke tests, load tests, spike tests, and stress tests.”

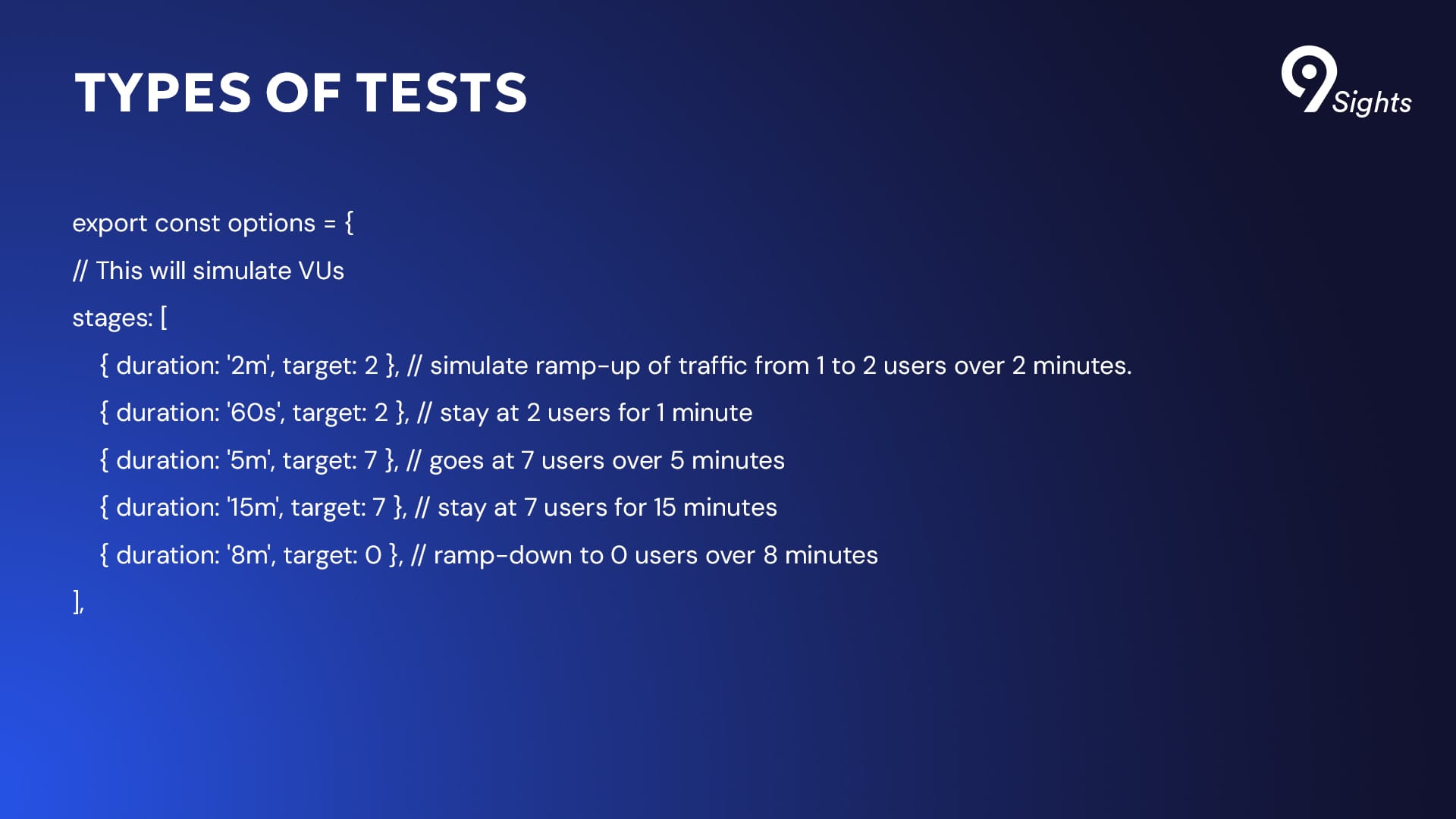
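
As a rough illustration of the building blocks George describes, a minimal K6 script might look like the sketch below. The endpoint URL, the single virtual user, and the 30-second duration are placeholders chosen for this example, not values from the project.

```javascript
// Minimal k6 sketch: one HTTP request followed by a sleep.
// Run with the k6 CLI (k6 run script.js); it does not run under Node.
import http from 'k6/http';
import { check, sleep } from 'k6';

// Assumed settings for illustration: 1 virtual user for 30 seconds.
export const options = {
  vus: 1,
  duration: '30s',
};

export default function () {
  // Hypothetical endpoint; replace with the service under test.
  const res = http.get('https://example.com/');

  // Record whether the response came back with HTTP 200.
  check(res, {
    'status is 200': (r) => r.status === 200,
  });

  // Pause for one second between iterations to pace the virtual user.
  sleep(1);
}
```

Smoke, load, spike, and stress tests typically reuse the same default function and differ mainly in the `options` block.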

Another kind of test that is easy to run with K6 relies on stages and ramping. “We can configure a test to ramp up from one virtual user to two over two minutes, maintain that load for a minute, then gradually increase to seven users over five minutes,” George illustrates. “The system then sustains that seven-user load for 15 minutes before ramping down to zero.” This granular control over virtual user counts and test duration gives the team the tools to simulate real-world usage patterns and stress test the system under various conditions.
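
The ramping profile George describes could be expressed roughly as follows, using K6's `ramping-vus` executor. This is a sketch under assumptions: the scenario name, the endpoint, and the two-minute ramp-down are invented for the example (the source gives no ramp-down duration), and the team's actual configuration may use the shorter `stages` option instead.

```javascript
// Sketch of a staged load profile with k6's ramping-vus executor.
import http from 'k6/http';
import { sleep } from 'k6';

export const options = {
  scenarios: {
    // "ramping_load" is a hypothetical scenario name.
    ramping_load: {
      executor: 'ramping-vus',
      startVUs: 1, // begin with one virtual user
      stages: [
        { duration: '2m', target: 2 },  // ramp from 1 to 2 users over two minutes
        { duration: '1m', target: 2 },  // hold 2 users for one minute
        { duration: '5m', target: 7 },  // ramp to 7 users over five minutes
        { duration: '15m', target: 7 }, // sustain 7 users for 15 minutes
        { duration: '2m', target: 0 },  // ramp down to zero (duration assumed)
      ],
    },
  },
};

export default function () {
  // Hypothetical endpoint; replace with the service under test.
  http.get('https://example.com/');
  sleep(1);
}
```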

Grafana for visual granular metrics

Once the test is over, K6 provides a top-level overview of results directly in the console, which can be customized to highlight specific metrics of interest. “The default output is quite simple,” George explains, “but we’ve customized it to include specific indicators marked in green for better visibility.”
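
One way to customize the console output is K6's `handleSummary` hook, which receives the collected metrics when the test ends. The sketch below is an assumption about how such a customization could look, not the team's actual script: the choice of metrics, the labels, and the ANSI green coloring are all illustrative.

```javascript
// Sketch of a custom end-of-test summary using k6's handleSummary hook.
import http from 'k6/http';
import { sleep } from 'k6';

// ANSI escape codes for green text in terminals that support them.
const GREEN = '\x1b[32m';
const RESET = '\x1b[0m';

export default function () {
  // Hypothetical endpoint; replace with the service under test.
  http.get('https://example.com/');
  sleep(1);
}

// Called once by k6 after the test finishes.
export function handleSummary(data) {
  const duration = data.metrics.http_req_duration.values;
  const failed = data.metrics.http_req_failed.values;

  const lines = [
    'Performance summary',
    `${GREEN}avg response time: ${duration.avg.toFixed(2)} ms${RESET}`,
    `${GREEN}p95 response time: ${duration['p(95)'].toFixed(2)} ms${RESET}`,
    `failed requests: ${(failed.rate * 100).toFixed(2)} %`,
  ];

  // The "stdout" key tells k6 to print the text to the console.
  return { stdout: lines.join('\n') + '\n' };
}
```

Defining `handleSummary` replaces K6's default summary, so any default figure still of interest has to be added to the returned text explicitly.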

For more aesthetically pleasing visuals, the metrics are stored in InfluxDB and connected to Grafana. The bottom line is also easier to see: the performance testing system clearly highlights which requests are slowest and what needs to be fixed.

“The graphical representations are much appreciated by the product owners,” Victor notes. “They are much easier to understand than JMeter’s complex tables and reports.” Anyone can see in an instant what is performing well and what needs attention.

Performance testing in KPIs

After a successful proof of concept, Levi9 now works with the Dutch company to evolve its performance testing approach. “We’re currently setting up performance pipelines as one of the company’s KPIs,” George notes. Performance testing is now integrated into their software development lifecycle, with regular testing scheduled during regression testing phases. The team maintains dedicated tenants for performance testing, ensuring they can accurately simulate production loads without affecting client environments.

The late-night crisis had a happy ending: it transformed how Levi9’s client approaches performance testing. As Victor concludes, “The key is understanding what the client actually needs. For some, it might be performance. For others, it might be device coverage or UI testing capabilities. Focus on those specific needs, and build your solution around them.”